I watched the webinar Blue Iris had on this, and I have it saving the DeepStack analysis details (.dat file). I also have it set up to use the GPU (my GTX 1080), so I feel I have more than enough headroom.

I've been playing with the settings and have tried 10 images every 500 ms and 20 images every 250 ms, but it still seems to analyze only 3 images and reports that nothing is found. This happens across all 16 of my cameras, even with the setting at 20 images every 250 ms.

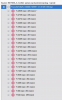

Example below:

I've seen recommendations to use something like 5 images every 1 second, but maybe I'm not getting it: why wouldn't I want to crank it up as high as I can, say 20 images every 250 ms, to give DeepStack as many chances as possible to spot an object in that time frame? The way I see it, 20 images at 250 ms covers about 5 seconds. I feel 5 images every 1 second isn't ideal, at least for me, because if someone leaves the frame in less than 5 seconds, it may not have captured anything (again, that's just my thinking).
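To put rough numbers on this, here's a quick back-of-the-envelope sketch in Python. It assumes (my assumption, not something from the webinar) that Blue Iris grabs one image per interval after the trigger. Under that assumption the time covered is roughly images × interval, and the longest blind spot between two samples is one interval. The function names are made up for illustration and aren't part of Blue Iris or DeepStack.

```python
# Rough sketch: ASSUMES one image is grabbed every interval_ms after the trigger.
# window_ms and sample_times_ms are hypothetical helpers, not Blue Iris/DeepStack APIs.


def window_ms(num_images: int, interval_ms: int) -> int:
    """Approximate time span (ms) covered by the analysis."""
    return num_images * interval_ms


def sample_times_ms(num_images: int, interval_ms: int) -> list[int]:
    """Offsets (ms after the trigger) at which each image would be grabbed."""
    return [i * interval_ms for i in range(num_images)]


if __name__ == "__main__":
    # The two settings I tried.
    for count, interval in [(10, 500), (20, 250)]:
        print(
            f"{count} images every {interval} ms -> ~{window_ms(count, interval)} ms covered, "
            f"up to {interval} ms between samples"
        )
```

By this math both settings I tried cover about the same ~5 seconds; the only difference is how closely the samples are spaced.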

When it does analyze, I've seen the Python process use only about 2-3% GPU load, which is nothing, so I feel there's plenty of headroom.