You ARE getting responses from CPAI in the Face recognition module? That means the IP address and port should be good.

When you set Blue Iris to alert on something common (like cars), you don't get responses.

You say that Blue Iris is not sending requests to the CPAI server.

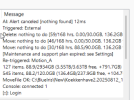

When you look at the log in CPAI, you DO NOT see client requests from Blue Iris.

Like this:

2025-08-22 05:34:50: Response rec'd from Object Detection (YOLOv5 .NET) command 'custom' (#reqid 8764a2cb-afde-4966-b1e0-955d184de65c) ['Found Car'] took 139ms

2025-08-22 05:34:50: Client request 'custom' in queue 'objectdetection_queue' (#reqid 0a44f603-9b84-4462-8c7e-5b3d245b0a97)

2025-08-22 05:34:50: Request 'custom' dequeued from 'objectdetection_queue' (#reqid 0a44f603-9b84-4462-8c7e-5b3d245b0a97)

2025-08-22 05:34:51: Response rec'd from Object Detection (YOLOv5 .NET) command 'custom' (#reqid 0a44f603-9b84-4462-8c7e-5b3d245b0a97) ['Found Car'] took 143ms

2025-08-22 05:34:51: Client request 'custom' in queue 'objectdetection_queue' (#reqid 7b5acd9b-8a3c-4eda-b7fd-e172e54dc1ed)

2025-08-22 05:34:51: Request 'custom' dequeued from 'objectdetection_queue' (#reqid 7b5acd9b-8a3c-4eda-b7fd-e172e54dc1ed)

2025-08-22 05:34:51: Response rec'd from Object Detection (YOLOv5 .NET) command 'custom' (#reqid 7b5acd9b-8a3c-4eda-b7fd-e172e54dc1ed) ['Found Car'] took 152ms

2025-08-22 05:34:51: Client request 'custom' in queue 'objectdetection_queue' (#reqid 1712ee8f-0a77-4907-906f-5e4bd37c62fa)

2025-08-22 05:34:51: Request 'custom' dequeued from 'objectdetection_queue' (#reqid 1712ee8f-0a77-4907-906f-5e4bd37c62fa)

2025-08-22 05:34:52: Response rec'd from Object Detection (YOLOv5 .NET) command 'custom' (#reqid 1712ee8f-0a77-4907-906f-5e4bd37c62fa) ['Found Car'] took 153ms

2025-08-22 05:34:52: Client request 'custom' in queue 'objectdetection_queue' (#reqid 3dd0ebe3-2bd1-432c-b71b-1918d888a722)

2025-08-22 05:34:52: Request 'custom' dequeued from 'objectdetection_queue' (#reqid 3dd0ebe3-2bd1-432c-b71b-1918d888a722)

2025-08-22 05:34:52: Response rec'd from Object Detection (YOLOv5 .NET) command 'custom' (#reqid 3dd0ebe3-2bd1-432c-b71b-1918d888a722) ['Found Car'] took 166ms
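If scrolling through the log by eye gets tedious, here is a rough Python sketch that counts the three kinds of lines shown above in a saved copy of the log. The patterns are written only from the excerpt in this post, so treat them as an assumption about the log format rather than anything official; `count_events` is just a name I made up.

```python
import re
from collections import Counter

# Patterns taken from the log excerpt in this post (assumed format, not an official spec).
EVENT_PATTERNS = {
    "queued": re.compile(r"Client request '(\w+)' in queue '(\w+)'"),
    "dequeued": re.compile(r"Request '(\w+)' dequeued from '(\w+)'"),
    "response": re.compile(r"Response rec'd from (.+?) command '(\w+)'"),
}


def count_events(log_text: str) -> Counter:
    """Count queued / dequeued / response lines in a chunk of CPAI log text."""
    counts = Counter()
    for line in log_text.splitlines():
        for name, pattern in EVENT_PATTERNS.items():
            if pattern.search(line):
                counts[name] += 1
                break  # each line is at most one kind of event
    return counts


# Three lines copied from the excerpt above.
sample = """\
2025-08-22 05:34:51: Client request 'custom' in queue 'objectdetection_queue' (#reqid 1712ee8f-0a77-4907-906f-5e4bd37c62fa)
2025-08-22 05:34:51: Request 'custom' dequeued from 'objectdetection_queue' (#reqid 1712ee8f-0a77-4907-906f-5e4bd37c62fa)
2025-08-22 05:34:52: Response rec'd from Object Detection (YOLOv5 .NET) command 'custom' (#reqid 1712ee8f-0a77-4907-906f-5e4bd37c62fa) ['Found Car'] took 153ms
"""

counts = count_events(sample)
print(dict(counts))
if counts["queued"] == 0:
    print("No client requests found: nothing is reaching the object detection queue.")
```

To use it on your own log, paste the log text into a file and pass its contents to `count_events`. A "queued" count of zero while a camera is triggering is the symptom described here.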

If you DO NOT see client requests in the CPAI log, you can verify what Blue Iris is actually sending by doing a packet capture. The calls and responses are HTTP calls. (Documented somewhere in the CPAI docs, I don't know where offhand.)

See this: the Microsoft article that provides an overview of the Packet Monitor (Pktmon) network diagnostics tool and its uses (learn.microsoft.com).
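Since the calls are plain HTTP, another sanity check is to send CPAI an object detection request yourself, without Blue Iris in the picture. The sketch below is a minimal example using only the Python standard library. It assumes the DeepStack-style route `/v1/vision/detection` with the image in a form field named `image`, and port 32168; check those against your own install and use whatever IP address and port you entered in Blue Iris. `build_multipart` and `detect` are hypothetical helper names, not part of any CPAI client.

```python
import json
import urllib.request
import uuid
from typing import Tuple


def build_multipart(field: str, filename: str, data: bytes) -> Tuple[bytes, str]:
    """Build a one-file multipart/form-data body; returns (body, content_type)."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def detect(image_path: str,
           host: str = "127.0.0.1",
           port: int = 32168,                     # assumed port, match your setup
           route: str = "/v1/vision/detection",   # assumed DeepStack-style route
           timeout: float = 10.0) -> dict:
    """POST one image to the CPAI server and return the decoded JSON reply."""
    with open(image_path, "rb") as f:
        image_bytes = f.read()
    body, content_type = build_multipart("image", "snapshot.jpg", image_bytes)
    request = urllib.request.Request(
        f"http://{host}:{port}{route}",
        data=body,
        headers={"Content-Type": content_type},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Save a snapshot from the camera and call `detect("snapshot.jpg")`. If you get JSON back and a client request appears in the CPAI log, the server side is fine and the problem is in the Blue Iris camera settings.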

If Blue Iris IS NOT sending requests for object detection, then the configuration in the camera / Blue Iris is wrong. (Do one camera at a time. Change one thing at a time.)

If that is the case, I would suggest disabling all but one camera (maybe point it at the street to detect cars) and working on getting that one to work.

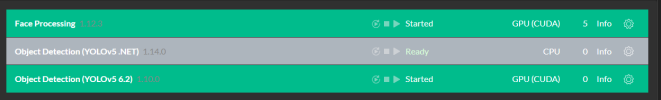

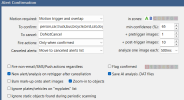

I will send screenshots of the configuration that I have been using for years. (I used to disable custom models and switch back and forth between CPAI and DeepStack just by changing the IP address and port.)

Like some others have said, running CPAI on a Linux machine or VM throws a lot more complication into the mix. Trust me, running CPAI on the same box as Blue Iris is much easier. Version 2.9.5 has been pretty good for me; I've gotten 900,000 inferences before rebooting the machine. (I did have an issue last week where CPAI quit for no apparent reason, however.)

This version has been running on my Windows 11 box for almost eight months.